I am very much a novice LaTeX user compared to the experienced members of this site. I have often seen some heated conversations about the new LaTeX3 updates in [this](https://topanswers.xyz/tex) chat room. After reading those chats I always feel that common users (like me) know nothing about what's really happening in the community. Fortunately we have members of the L3 team here, as well as some critics of the new changes. It would be good if these two sides could articulate what's going on behind the scenes and the pros and cons of it. Also, should a common user really worry about these changes or not? It would be great if l3 experts could show small examples of what can actually be done with expl3 that was not possible earlier.

I didn't want to write an answer but this appears to be too long for a comment (can't see the bottom of it anymore...).

I fully agree with [Skillmon](https://topanswers.xyz/transcript?room=1462&id=76781#c76781) here. My advice to the OP, if you have enough time:

1. Read the TeXbook and program some things in TeX. At this point, you should understand the difficulties this entails (basic data structures, parsing of “text,” flow control, floating point computations that exceed 16383.99999...). In many cases, the life saver is an e-TeX or pdfTeX primitive, if not LuaTeX entirely—all of which aren't part of Knuth's TeX, thus outside the scope of the TeXbook.

2. Look at how LaTeX2e makes this easier: not much. Some *packages* can help a little bit (`etoolbox`, `xifthen`, `intcalc` and a few other packages from Heiko Oberdiek; the PGF bundle...). Apart from PGF, it's all scattered. Even PGF is scattered in some places (think about usage of the `fpu` in `pgfmath`, when trying to do smart things with `pgfplots`: it's always doable but can be tricky).

3. Read [expl3.pdf](http://mirrors.ctan.org/macros/latex/contrib/l3kernel/expl3.pdf), skim through [l3styleguide.pdf](http://mirrors.ctan.org/macros/latex/contrib/l3kernel/l3styleguide.pdf), except section 2, which is for people using `l3doc` for literate TeX programming; and finally, read the parts of [interface3.pdf](http://mirrors.ctan.org/macros/latex/contrib/l3kernel/interface3.pdf) that you need for a given task. There you'll see many high-level functions easy to use and *cooperating* with each other, some unified programming environment. For instance, when `\regex_extract_once:nnNTF` extracts match groups for a regular expression, it uses a `seq` variable to store the matched tokens and the groups. `seq` variables are standard `expl3` sequences, so if you had already learnt to work with them, it's very easy to use the result of `\regex_extract_once:nnNTF` (you have `\seq_get_left:NN` to retrieve the first element and store it in a macro aka `tl` var, `\seq_pop_left:NN` to do the same and remove the element, `\seq_item:Nn` to expandably get the *n*th item in the input stream, where *n* is an arbitrary *integer expression* as described in the `l3int` chapter of the same [interface3.pdf](http://mirrors.ctan.org/macros/latex/contrib/l3kernel/interface3.pdf), e.g., `5 + 4 * \l_my_int - ( 3 + 4 * 5 )` with standard priority rules, etc.). It's all like that, unity.

4. Don't fear wasting time learning TeX programming before `expl3`: TeX is such a strange beast, that knowing its essential rules is probably a must in order to make sense of the `expl3` docs. (I think it helps a lot to understand the difference between expansion and execution(*), the effect of grouping, the difference between local and global assignments, to realize that `x` expansion is what `\edef` does, `e` expansion is what `\expanded` does, `\exp_not:n` is `\unexpanded`, `f` expansion is what you would obtain after `` \romannumeral`\^^@ ``, `o` expansion is what you would do with an `\expandafter`, that TeX always expands tokens recursively when processing a `\csname`, reading a ⟨number⟩, a ⟨dimen⟩ or a ⟨glue⟩, etc).
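
To make point 3 a little more concrete, here is a minimal sketch of how `\regex_extract_once:nnNTF` and the `seq` functions fit together. The `demo` names (`\demoSplitVersion`, `\l__demo_match_seq`, etc.) and the "major.minor" input format are made up for illustration; only the `expl3` functions themselves come from interface3.pdf.

```latex
\documentclass{article}
\usepackage{xparse} % not necessary with LaTeX 2020-10-01 or later
\ExplSyntaxOn
% Hypothetical names, for illustration only.
\seq_new:N \l__demo_match_seq
\tl_new:N  \l__demo_whole_tl

\cs_new_protected:Npn \demo_split_version:n #1
  {
    % Item 1 of the sequence is the whole match; items 2 and 3 are the
    % two captured groups (digits before and after the dot).
    \regex_extract_once:nnNTF { \A (\d+) \. (\d+) \Z } {#1} \l__demo_match_seq
      {
        % Store the first item (the whole match) in a tl var.
        \seq_get_left:NN \l__demo_match_seq \l__demo_whole_tl
        whole:~ \l__demo_whole_tl ;~
        % The second argument of \seq_item:Nn is an integer expression.
        major:~ \seq_item:Nn \l__demo_match_seq { 1 + 1 } ;~
        minor:~ \seq_item:Nn \l__demo_match_seq { 1 + 2 }
      }
      { no~match }
    \par
  }

\NewDocumentCommand \demoSplitVersion { m }
  { \demo_split_version:n {#1} }
\ExplSyntaxOff

\begin{document}
\demoSplitVersion{3.14}

\demoSplitVersion{not a version}
\end{document}
```

The point is not the regexp itself but that the result lands in an ordinary sequence, so everything you already know about `seq` variables applies to it.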

The problem with the TeXbook for this kind of learning is that its goal is not to teach TeX programming, rather typesetting using TeX for trained professionals. It does teach a lot that is useful for programming, but in order to really understand the points made by the author, you may need to read some things very carefully, twice or more, and sometimes use a search engine or experiment by yourself to understand things better.
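
As an example of such an experiment, the following sketch plays with the expansion types mentioned in point 4 (`o`, `x` and `\exp_not:n`). The variable and command names are hypothetical; the comments state which tokens each `tl` var holds after the assignment, and the document merely prints them with `\tl_to_str:N` so you can check for yourself.

```latex
\documentclass{article}
\usepackage{xparse} % not necessary with LaTeX 2020-10-01 or later
\ExplSyntaxOn
% Hypothetical names, for illustration only.
\tl_new:N \l__demo_inner_tl
\tl_new:N \l__demo_outer_tl
\tl_new:N \l__demo_o_tl
\tl_new:N \l__demo_x_tl
\tl_new:N \l__demo_mixed_tl

\tl_set:Nn \l__demo_inner_tl { inner }
\tl_set:Nn \l__demo_outer_tl { \l__demo_inner_tl ! }

% o: a single expansion step, as with one \expandafter.
% \l__demo_o_tl now holds the tokens: \l__demo_inner_tl !
\tl_set:No \l__demo_o_tl { \l__demo_outer_tl }

% x: exhaustive expansion, as with \edef.
% \l__demo_x_tl now holds: inner!
\tl_set:Nx \l__demo_x_tl { \l__demo_outer_tl }

% \exp_not:n (i.e. \unexpanded) protects its argument from x expansion.
% \l__demo_mixed_tl now holds: \l__demo_outer_tl = inner!
\tl_set:Nx \l__demo_mixed_tl
  { \exp_not:n { \l__demo_outer_tl } = \l__demo_outer_tl }

\NewDocumentCommand \demoShowExpansion { }
  {
    o:~     \texttt { \tl_to_str:N \l__demo_o_tl     } \par
    x:~     \texttt { \tl_to_str:N \l__demo_x_tl     } \par
    mixed:~ \texttt { \tl_to_str:N \l__demo_mixed_tl } \par
  }
\ExplSyntaxOff

\begin{document}
\demoShowExpansion
\end{document}
```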

I'm definitely not an `expl3` expert, but an example of some task that `expl3` makes easy to perform efficiently could be: “[How to count *n* things?](https://topanswers.xyz/tex?q=1291)”. My solution there uses very natural `expl3` tools to:

- incrementally build a mapping from each of the obtained values to the number of times it was obtained (I use a “property list,” aka `l3prop`);

- create a sequence of “ordered pairs” of the form `{n}{count}` from it, and very simply sort it using the first component of each ordered pair as a sorting criterion (one could as easily sort it according to the second component, since `\seq_sort:Nn`'s second argument tells how two elements compare to each other, and `\seq_sort:Nn` doesn't assume anything about the elements of the sequence);

- iterate over the sorted sequence to build a `tl` var—a simple macro—that contains the interesting data (frequencies obtained by dividing each *count* by the number of samples), properly formatted for direct use inside the table we wanted to produce.
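
The three steps above can be sketched on a much smaller scale as follows. This is not the code from the linked answer, just a reduced illustration with made-up `demo` names: it counts how often each integer occurs in a comma list, sorts the `{value}{count}` pairs by value, and prints one line per pair.

```latex
\documentclass{article}
\usepackage{xparse} % not necessary with LaTeX 2020-10-01 or later
\ExplSyntaxOn
% Hypothetical names, for illustration only.
\prop_new:N \l__demo_count_prop
\seq_new:N  \l__demo_pairs_seq
\tl_new:N   \l__demo_old_tl
\int_new:N  \l__demo_new_int

\cs_new_protected:Npn \__demo_print_pair:n #1 { \__demo_print_pair:nn #1 }
\cs_new_protected:Npn \__demo_print_pair:nn #1#2
  { #1 ~occurs~ #2 ~time(s) \par }

\cs_new_protected:Npn \demo_tally:n #1
  {
    % Step 1: map each value to the number of times it occurs.
    \prop_clear:N \l__demo_count_prop
    \clist_map_inline:nn {#1}
      {
        \prop_get:NnNTF \l__demo_count_prop {##1} \l__demo_old_tl
          { \int_set:Nn \l__demo_new_int { \l__demo_old_tl + 1 } }
          { \int_set:Nn \l__demo_new_int { 1 } }
        \prop_put:NnV \l__demo_count_prop {##1} \l__demo_new_int
      }
    % Step 2: build a sequence of {value}{count} pairs.
    \seq_clear:N \l__demo_pairs_seq
    \prop_map_inline:Nn \l__demo_count_prop
      { \seq_put_right:Nn \l__demo_pairs_seq { {##1} {##2} } }
    % Step 3: sort on the first component of each pair. Using \use_ii:nn
    % instead of \use_i:nn would sort on the counts.
    \seq_sort:Nn \l__demo_pairs_seq
      {
        \int_compare:nNnTF { \use_i:nn ##1 } > { \use_i:nn ##2 }
          { \sort_return_swapped: }
          { \sort_return_same: }
      }
    % Step 4: use the sorted data.
    \seq_map_function:NN \l__demo_pairs_seq \__demo_print_pair:n
  }

\NewDocumentCommand \demoTally { m } { \demo_tally:n {#1} }
\ExplSyntaxOff

\begin{document}
\demoTally{3, 1, 3, 2, 1, 3}
\end{document}
```

The sorting code only has to say how two items compare; `\seq_sort:Nn` takes care of the rest, whatever the items look like.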

Another thing that is highly non-trivial to do without `expl3`, IMHO, is what the `l3regex` module can do. It is much more than a “simple” regular expression engine, which pdfTeX's `\pdfmatch` primitive provides (and which would already be quite difficult to implement with Knuth's TeX). `l3regex` has been designed to handle the particular nature of TeX's input: not a stream of characters, but a stream of tokens, namely:

- explicit character tokens with a character and a category code attached;

- control sequence tokens.

`l3regex` regular expressions allow one to match against precise tokens, not just character codes. That is why the syntax is a bit more complicated than for traditional regexps, which many people already find difficult. But if you understand the nature of TeX's input stream after the tokenization stage, are used to regular expressions in other languages (Perl, Python, sed, Bash, C++...) and read the `l3regex` documentation which has many examples, it should all make sense!
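
Here is a small sketch of what "matching tokens, not characters" means in practice. The names `\demoBoldToEmph` and `\l__demo_...` are invented for this illustration. The regexp `\c{textbf}` matches the control sequence token `\textbf`, but not the six letters "textbf" appearing as ordinary text.

```latex
\documentclass{article}
\usepackage{xparse} % not necessary with LaTeX 2020-10-01 or later
\ExplSyntaxOn
% Hypothetical names, for illustration only.
\tl_new:N  \l__demo_text_tl
\int_new:N \l__demo_count_int
% Variant taking the token list to search from a tl var.
\cs_generate_variant:Nn \regex_count:nnN { nV }

\cs_new_protected:Npn \demo_bold_to_emph:n #1
  {
    \tl_set:Nn \l__demo_text_tl {#1}
    % Count the \textbf control sequence tokens in the input.
    \regex_count:nVN { \c{textbf} } \l__demo_text_tl \l__demo_count_int
    % In the replacement text, \c{emph} builds the control sequence \emph.
    \regex_replace_all:nnN { \c{textbf} } { \c{emph} } \l__demo_text_tl
    \tl_use:N \l__demo_text_tl
    \c_space_token ( \int_use:N \l__demo_count_int \c_space_token replaced )
    \par
  }

\NewDocumentCommand \demoBoldToEmph { m }
  { \demo_bold_to_emph:n {#1} }
\ExplSyntaxOff

\begin{document}
% Two \textbf tokens and one plain word "textbf": only the tokens count.
\demoBoldToEmph{\textbf{bold} textbf \textbf{more}}
\end{document}
```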

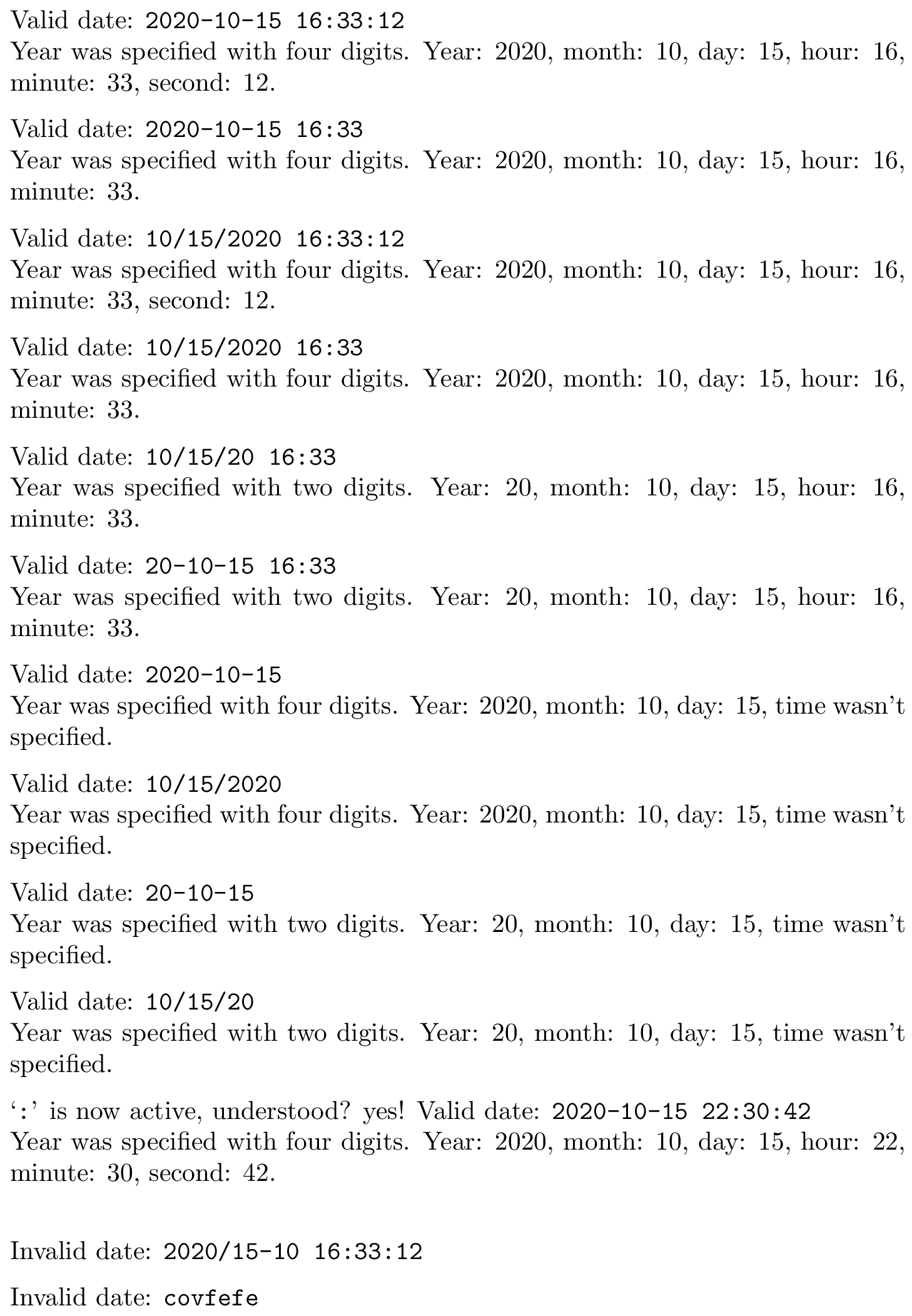

For instance, regexps make it trivial to parse a date in (YY)YY-MM-DD HH:MM:SS format. As an example of the power of regexps, let's make this more complicated and define a single regular expression that can validate, in a single call:

- a date in (YY)YY-MM-DD format (“ISO” style, except I believe actual ISO 8601 mandates four-digit years);

- a date in MM/DD/(YY)YY format (“US” style);

- a date in either of these formats followed by at least one space, then by a time in HH:MM(:SS) format.

Of course, parenthesized things are optional in this informal description. I won't verify that hours are < 24, minutes < 60, etc. to avoid making the thing too long (it is already a bit...). All the rest is verified. With the `l3regex` functions, one could very easily go further and for instance count all occurrences of such a “datetime” in a given token list regardless of the grouping level it appears in, replace all such occurrences, etc.

```
\documentclass{article}
% When these are uncommented and compilation is done with pdflatex, ':' is an
% active character in the whole 'document' environment.
% \usepackage[T1]{fontenc}
% \usepackage[french]{babel}
\usepackage{xparse} % not necessary with LaTeX 2020-10-01 or later

\ExplSyntaxOn

% Compile the regexp (optional; speeds things up if it is used many times).
\regex_const:Nn \c_my_datetime_regex
  {
    \A % anchor match at the start of the “string”
    ( (\d{2} | \d{4}) (\-) (\d\d) \- (\d\d) |
%   1 2               3    4         5  <--- captured group numbers
      (\d\d) \/ (\d\d) \/ (\d{2} | \d{4})
%     6         7         8
    )
    % optionally followed by: one or more spaces plus time in HH:MM(:SS) format
    % The ':' separators can have category Other (12) or Active (13).
    (?: \ + ( (\d\d) \c[OA]\: (\d\d) (?: \c[OA]\: (\d\d) )? ) )?
%           9 10              11                  12
    \Z % we must be at the end of the “string”
  }

\seq_new:N \l__my_result_seq

% Return match group number #1 (an integer expression) from \l__my_result_seq
\cs_new:Npn \__my_group:n #1 { \seq_item:Nn \l__my_result_seq { 1 + (#1) } }

% Generate conditional variants \tl_if_empty:eF and \tl_if_empty:eTF
\prg_generate_conditional_variant:Nnn \tl_if_empty:n { e } { F, TF }

% Is the date in US or in ISO 8601 style?
\prg_new_conditional:Npnn \my_if_US_style: { T, F, TF }
  {
    \tl_if_empty:eTF { \__my_group:n { 3 } }
      { \prg_return_true: }
      { \prg_return_false: }
  }

% Define \__my_get_year:, \__my_get_month: and \__my_get_day:.
% The year is captured group number 8 in US style, number 2 in ISO style, etc.
\clist_map_inline:nn { { year, 8, 2 }, { month, 6, 4 }, { day, 7, 5 } }
  {
    \cs_new:cpn { __my_get_ \clist_item:nn {#1} { 1 } : }
      {
        \my_if_US_style:TF
          { \__my_group:n { \clist_item:nn {#1} { 2 } } }
          { \__my_group:n { \clist_item:nn {#1} { 3 } } }
      }
  }

% Define \__my_get_{time, hour, minute, second}:.
% Yes, one could simply use what precedes, but what follows will be faster upon
% use, since there is no condition to evaluate.
\clist_map_inline:nn
  { { time, 9 }, { hour, 10 }, { minute, 11 }, { second, 12 } }
  {
    \cs_new:cpn { __my_get_ \clist_item:nn {#1} { 1 } : }
      {
        \__my_group:n { \clist_item:nn {#1} { 2 } }
      }
  }

\cs_new_protected:Npn \__my_print_verbatim_date:n #1
  {
    % \tl_to_str:n neutralizes catcodes (':' could be active in French...)
    \texttt { \tl_to_str:n {#1} }
  }

\cs_generate_variant:Nn \tl_count:n { f } % generate variant \tl_count:f

\cs_new_protected:Npn \my_describe_datetime:n #1
  {
    \regex_extract_once:NnNTF \c_my_datetime_regex {#1} \l__my_result_seq
      {
        Valid~date:~\__my_print_verbatim_date:n {#1} \\
        Year~was~specified~with~
        \int_case:nnF { \tl_count:f { \__my_get_year: } }
          {
            { 2 } { two }
            { 4 } { four }
          }
          { this~place~is~unreachable }
        \c_space_token digits. \c_space_token
        Year:~ \__my_get_year: , \c_space_token
        month:~ \__my_get_month: , \c_space_token
        day:~ \__my_get_day: , \c_space_token
        \tl_if_empty:eTF { \__my_get_time: }
          { time~wasn't~specified. }
          {
            hour:~ \__my_get_hour:, \c_space_token
            minute:~\__my_get_minute:
            \tl_if_empty:eF { \__my_get_second: }
              { ,~ second:~ \__my_get_second: }
            . % final period. :-)
          }
      }
      { Invalid~date:~\__my_print_verbatim_date:n {#1} }
    \par\medskip
  }

% Document-level command
\NewDocumentCommand \myDescribeDateTime { m }
  { \my_describe_datetime:n {#1} }

\ExplSyntaxOff

\begin{document}
\setlength{\parindent}{0pt}

\myDescribeDateTime{2020-10-15 16:33:12}
\myDescribeDateTime{2020-10-15 16:33}
\myDescribeDateTime{10/15/2020 16:33:12}
\myDescribeDateTime{10/15/2020 16:33}
\myDescribeDateTime{10/15/20 16:33}
\myDescribeDateTime{20-10-15 16:33} % not ISO 8601
\myDescribeDateTime{2020-10-15}
\myDescribeDateTime{10/15/2020}
\myDescribeDateTime{20-10-15}
\myDescribeDateTime{10/15/20}

{ % Make ':' active as with \usepackage[french]{babel} under pdfTeX.
  \catcode`\:=13 \def:{yes!} `\texttt{\string:}' is now active, understood? :

  \myDescribeDateTime{2020-10-15 22:30:42}
}

\bigskip
\myDescribeDateTime{2020/15-10 16:33:12} % incorrect
\myDescribeDateTime{covfefe} % also incorrect
\end{document}
```

Another example could be [this answer of Skillmon](https://tex.stackexchange.com/a/567676/73317) where you can compare an `expl3` approach with one based on LaTeX2e, and decide for yourself which one is easier to read and write.

(*) Unfortunately, Knuth prefers to talk about “mouth” and “stomach” instead of expansion and execution in the TeXbook, but this is exactly the same. This just illustrates the fact that, IMHO, these things were not the main objectives of the book for him.

I know this is an old question, and I know that I probably should just let things be.

I don't want to start a new discussion on this, but I tried to ignore the points [in this answer](https://topanswers.xyz/tex?q=1420#a1663) in the hope that things would turn out for the best (which they didn't). I now think that completely ignoring this was probably not a good decision. This is still not an answer to the original question "What's going on in LaTeX?". It is just an extended comment on the aforementioned answer, trying to give my point of view on the points mentioned there, as well as objective information. The two things (opinion and objectivity) usually don't mix well, so I'll try to highlight objective stuff as such; the rest is *my opinion*.

So here is my take on this:

1. **Objective:**

> [...] `expl3`, which will eventually become `l3` without `exp`, which originally stood for experimental.

and

> At the end of the trial there should be a decision whether or not this is the way to go. IMHO this decision should be a democratic decision by the user base.

It is true that the `exp` in `expl3` stood for experimental. But `expl3` will not become `l3`. This was experimental more than a decade ago (originally the name was coined in the early 90s, so more like three decades ago). It was reworked and vastly improved more than a decade ago, and got adopted by many packages which in turn were used by many people (because those packages were doing stuff pretty well that many people needed or wanted). Because of the broad usage the "experimental" in `expl3` turned into something that had to stay if the maintainers didn't want to break stuff for *many* users.

As a result the "poll" was done by the user base via their usage of packages and package authors (who invested many hours into improving the LaTeX ecosystem for all of us) many years ago. The exact moment in time can't be really pointed out (at least not by me), but I reckon it was probably somewhat around 2013 or 2014 when the `experimental` notion was dropped from `expl3`, and the thing couldn't be removed from the LaTeX-world anymore.

2. There was enough discussion about this point already. You can do stuff with `expl3` that you can't do with plain TeX without implementing much functionality yourself, turning your small idea into a big adventure of TeX-pitfalls (fun for some, frustrating for many). Still, everything you can do with `expl3` you can do without it (in a logical/mathematical sense, in practice only few would).

3. My point here is mostly about the

> I find it just hard to believe that it is possible to write packages like unravel and at the same time it is hard to develop tools that allow one to spot these spaces more easily.

Could those tools be developed? Yes. But **objectively** the method used comes with close to no performance overhead (the overhead being the catcode setup done by `\ExplSyntaxOn` and `\ExplSyntaxOff`) while fixing the spurious space issue completely. There is no tool one would have to run explicitly, and which could report false positives or false negatives because of TeX's weirdness. It's simple: `~` is a space now, "normal" spaces are ignored. And since `~` is not category code active inside `\ExplSyntaxOn` the statement

> you can use ~ unless they are used otherwise already

does not apply. Any definition given to an active `~` elsewhere has no effect inside `\ExplSyntaxOn`.

4. That point is quite long. Let's split it up a bit.

> Imposed also by some of those who implemented somewhat random patterns regarding the usage `@`

**Subjective:** Maybe because they realised that vowel-replacement of `@` was a bad idea (**objective:** done not only by them, but also by Knuth long before they started to use (La)TeX, see the plain definition of `\newdimen` using a register named `\insc@unt`)

> I’d think that if you make these drastic changes to the kernel then you surely will be able to write a macro or switch that keeps track of that

**Objective:** There is a switch to test that, it is the `debug` option. *But* this comes at a heavy performance hit, whereas simply naming your variables so as to distinguish global from local comes *free of charge*.

> the threshold is much higher than it used to be because now one has to be familiar with two different philosophies

**Objective and subjective:** Compared to the overall number of users there aren't too many who understand plain TeX or LaTeX2e code. But, imho, understanding `expl3`-code is easier. It is true however that it's another language (or dialect) one has to learn if one wants to understand it.

> I bring this up in order to question the narrative that expl3 code is automatically clean and superior. IMHO the elegance of a code depends on the abilities of the author.

Agreed on the point that elegance of code depends on the author's ability. The question is whether a language design encourages more structure, and hence code that is easier to read and maintain. `expl3` tries to give such structure, which encourages cleaner code. Of course that alone doesn't automatically result in good code (see my `ducksay` package: the first version of it was horribly slow and had quite serious limitations, the second iteration is much faster, more versatile, and doesn't depend on nice user input, because I learned a lot of stuff in the meantime).

5. I agree that the wording of the documentation was poor.

6. Fully agree there is a lack of official introductory documentation on `expl3`. There is an introduction to `expl3` by a third party available, I heard it is good, but never read it myself. However, I doubt that the vast majority of users want to actively participate. Which share of users have read through `source2e`? Which share of users do know that it exists? As an interface reference there is `interface3.pdf` which serves the purpose of an interface reference really well. There is also `source3.pdf` which explains most aspects of the implementation. So there is a lot of documentation available (`source3.pdf` has over 1500 pages as of writing this...). However for the most part I agree with this point, introductory material is lacking.

7. This has nothing to do with "What's going on in LaTeX?". It is part of a stupid dispute of a relatively small number of people that I'm really not proud of being part of. No further comment.

8. Yes, LaTeX is attractive, but

> If you want to build a better version of LaTeX, start over from scratch, and do not promise things that you cannot keep just to gain control over a large user base.

This is **objectively** wrong (or misinformed). FMi et al. don't need to promise anything to "gain control over a large user base", FMi et al. created LaTeX2e by making many fundamental changes to LaTeX2.09 (to which they also contributed), among other things creating the package system as we know it.[^1] If you don't want to be under their control, you'd have to use the much older, less tested and less stable LaTeX2.09 for which (as far as I know) no packages (styles, there is no `\usepackage`) are actively maintained. For reference see https://ctan.org/pkg/latex209 (you can download the 2.09 sources there).
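
To illustrate points 3 and 4 above, here is a minimal sketch (with made-up `demo` names) of what "`~` is a space, normal spaces are ignored" and the `\l_`/`\g_` naming convention look like in practice. It does not use the `debug` option; it only shows that the variable name tells the reader whether an assignment is meant to be local or global.

```latex
\documentclass{article}
\usepackage{xparse} % not necessary with LaTeX 2020-10-01 or later
\ExplSyntaxOn
% Hypothetical names, for illustration only.
\int_new:N \l__demo_local_int  % \l_...: only ever assigned locally
\int_new:N \g__demo_global_int % \g_...: only ever assigned globally

\cs_new_protected:Npn \demo_spaces_and_scope:
  {
    % Literal spaces and line ends are ignored here; only '~' gives a space.
    No spaces here ,~ but~ one~ here. \par
    \group_begin:
      \int_set:Nn  \l__demo_local_int  { 1 } % undone at \group_end:
      \int_gset:Nn \g__demo_global_int { 1 } % survives the group
    \group_end:
    local:~  \int_use:N \l__demo_local_int ;~
    global:~ \int_use:N \g__demo_global_int
    \par
  }

\NewDocumentCommand \demoSpacesAndScope { }
  { \demo_spaces_and_scope: }
\ExplSyntaxOff

\begin{document}
% Outside \ExplSyntaxOn, '~' is the usual active tie again.
\demoSpacesAndScope
\end{document}
```

The first line is typeset as "Nospaceshere, but one here." and the second one shows 0 for the local variable and 1 for the global one.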

[^1]: This is not meant to reduce Lamport's achievements. At its time pre-2e LaTeX was brilliant, making TeX much more accessible for the masses.

There is already a post highlighting the strengths of `expl3`, which will eventually become `l3` without `exp`, which originally stood for experimental. I never said that this scheme is useless or bad because I do not think this is the case. However, I am critical of many developments. Here are a few examples:

1. To me the notion "experimental" seemed to suggest that this is a trial. At the end of the trial there should be a decision whether or not this is the way to go. IMHO this decision should be a democratic decision by the user base. However, we will have to live with `l3` without any poll. (Just to prevent statements saying that this is impossible: I am also an arXiv user, and the arXiv does such polls.)

2. It already has been mentioned that there is nothing in `expl3` that cannot be done within (La)TeX "only". This is a true statement because it is just a layer on top. The more relevant question should be whether or not there are things in TeX that cannot be done with `expl3` only. I do not know the answer, but presumably you can always import every TeX primitive you want and make things work, somehow.

3. It is hard to ignore the fact that `expl3` treats spaces in a peculiar way. This means, in particular, that you cannot blend in pgf keys easily, which can contain spaces. (To those who want to make comments: I know that you can use `~` unless they are used otherwise already, and I know what the so-called spurious spaces are. I find it just hard to believe that it is possible to write packages like `unravel` and at the same time it is hard to develop tools that allow one to spot these spaces more easily.)

4. The naming conventions have been mentioned already. One should not forget that these are rules that the users are supposed to follow. Imposed also by some of those who implemented somewhat random patterns regarding the usage `@`. Please note that I, too, want to know, say, if a macro is global or local. However, I'd think that if you make these drastic changes to the kernel then you surely will be able to write a macro or switch that keeps track of that. As of now, there are several packages which contain a wild mix of the old conventions with `@` symbols and underscores, one example being `mathtools`. Not too many users understand these codes, at least the threshold is much higher than it used to be because now one has to be familiar with two different philosophies. However, I do not bring this up to criticize the authors or maintainers of these packages, they did a great job IMHO. I bring this up in order to question the narrative that `expl3` code is automatically clean and superior. IMHO the elegance of a code depends on the abilities of the author. It also depends on making the conventions and tools accessible to many users.

5. I was criticizing an excerpt from `interface3.pdf`. There is [some movement in this regard](https://github.com/latex3/latex3/commit/8a19cc0b29177fed827df99e36b3eb89a36ee427). Of course, I was shouted at for bringing this up (the "discussion" can be found in the chat) but at least the wording has changed.

6. The documentation is suboptimal, to put it politely. Various members of the LaTeX3 team have actually signalled to me that they agree with this statement, at least to some extent. I also know that writing good documentation is real and hard work, and is nontrivial. And I hold those who wrote good manuals in my highest regards. If you think of it, `beamer` and `Ti*k*Z/pgf` are arguably among the most widely used packages, and really have excellent manuals, which is probably not just coincidence. However, saying that the manual is a lot of work is IMHO no good excuse for not providing one if you change the kernel that **every user has to load**. I know that I will receive the usual comments from some experts who tell me that they are OK with the manuals. This is not about them, nor me, but about general users who are wondering "What's going on in LaTeX?". I think that we should not exclude them from actively participating by not providing accessible manuals that also serve newcomers.

7. There is overall an interesting dynamic. When some of the `expl3` enthusiasts run out of arguments, they throw accusations at others like "Like many things in life, it works better when you approach people without accusing them of bad intentions." or "Obviously you do not read.", and even star these comments. They flag critical comments using reasoning that it is enough if there is one unfortunate way to read it, regardless of whether this was the intention of the comment. At the same time they appear to be surprised if one applies the same logic to flag their comments. That is, it is certainly not me who has the "bad intentions". Sadly, meanwhile even this post got flagged. What should have happened instead IMHO is that the comments accusing me of outrageous things should have been reworded.

8. Finally, I think that the most important piece of information is [this comment](https://topanswers.xyz/transcript?room=1462&id=78362#c78362). I agree that it is too late for rewriting LaTeX in the way it is intended. What makes LaTeX so attractive is IMHO the fact that one can still compile decade-old documents, and that it has been tested over many decades. Once you lose backwards compatibility (of course I am aware of the claims that one does not but you only have to skim through the TeX sites to see that these claims are not true), I do not see how a system which has, due to its age, a rather tight constraint on the maximal dimension and counts, which is not too accurate in computations, nor is parallelizable, can be competitive. If you want to build a better version of LaTeX, start over from scratch, and do not promise things that you cannot keep just to gain control over a large user base.

This list is, by far, not exhaustive. This is also not a real answer to the question. However, I wrote this post just so that there is an alternative to the IMHO overly rosy picture that gets painted elsewhere. I am writing it merely to say that there are also some who are critical and not convinced that what is currently being done will help LaTeX to keep its large user base.